SPHERE - VILab

SPHERE: sensors for the home environment that help people remain healthy and continue living in their own homes. Computer vision and machine learning for Human Activity Recognition and Analysis in Home Healthcare. Research in the Visual Information Laboratory (VILab), University of Bristol.

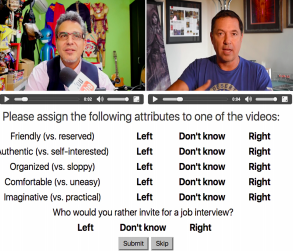

Chalearn LAP First Impressions - Speed Interviews

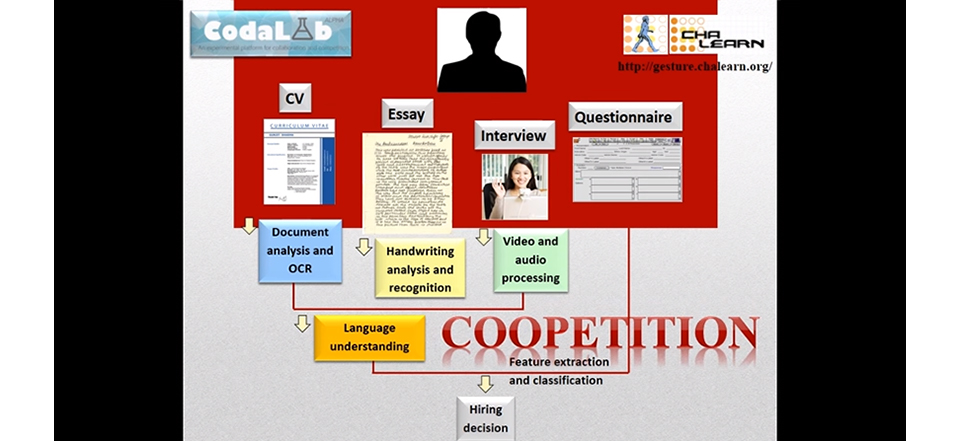

Workshops devoted to all aspects of computer vision and pattern recognition for the analysis of human personality from images and videos. Co-located with the workshops are challenges on first impressions, that is, recognizing a person's personality traits after viewing a short video of them. These challenges and workshops are part of the Speed Interviews project.

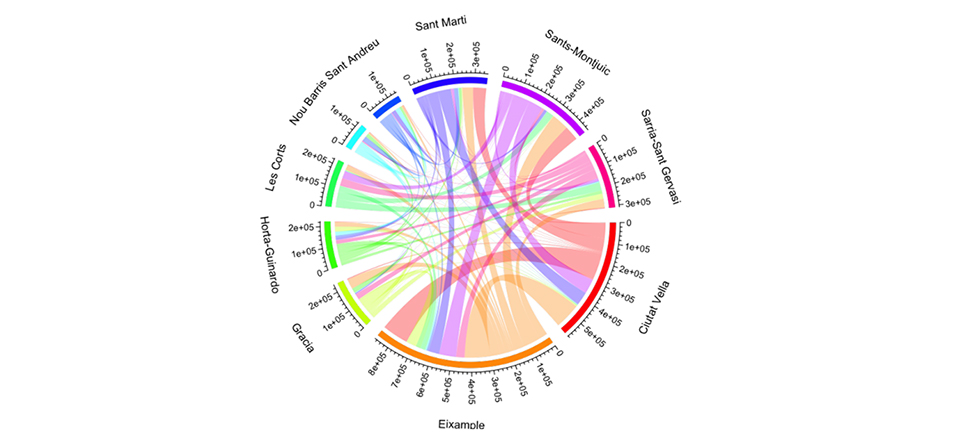

Human Mobility - Eurecat Catalonia Technology Centre

Call Detail Records (CDRs) used to study human mobility within the city of Barcelona. Big Data Technologies in the "Always Connected Era". A collaborative project with GSMA, MWC and Orange.

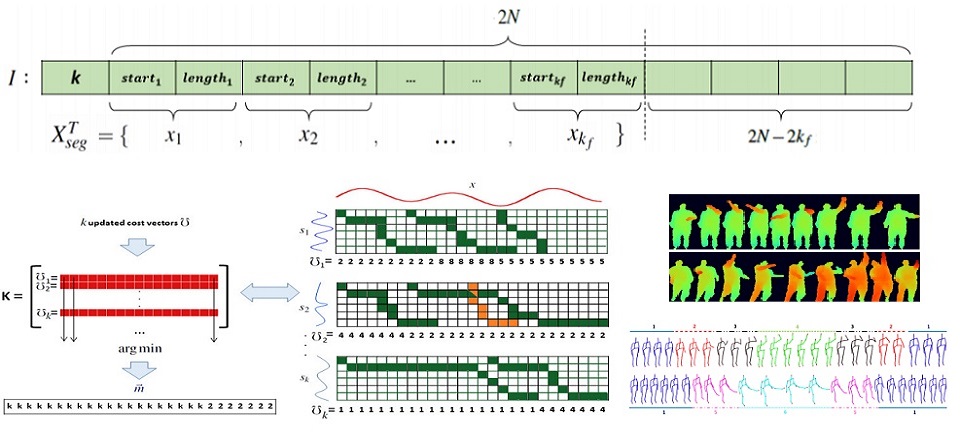

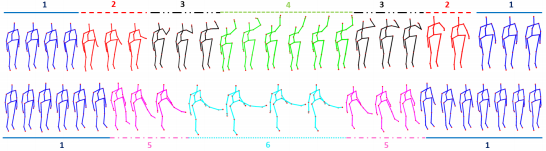

Evolved Dynamic Subgestures

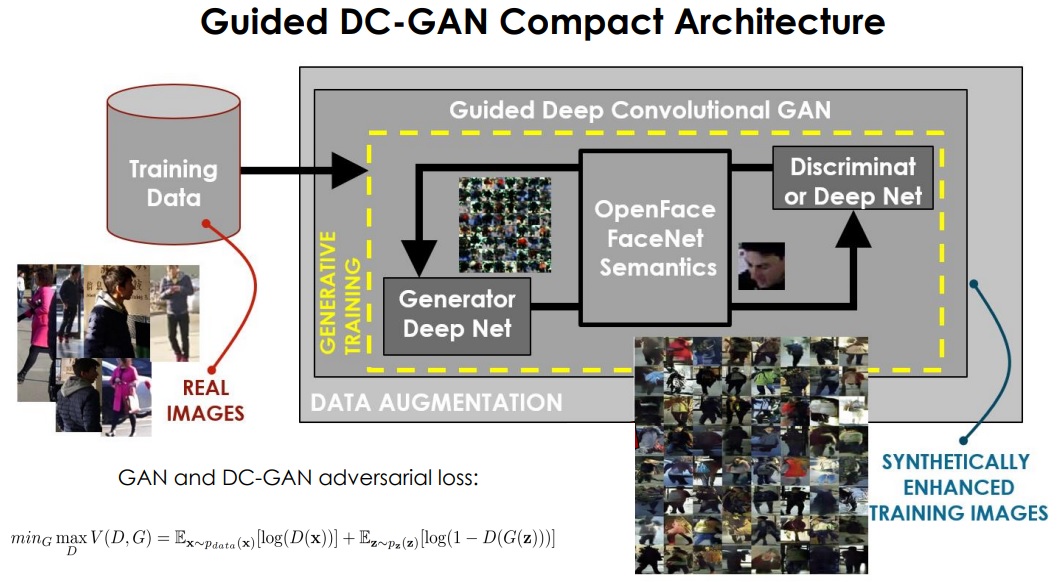

Framework for gesture and action recognition based on the evolution of temporal gesture primitives, or subgestures. This work is inspired by the principle of producing genetic variations within a population of gesture subsequences, with the goal of obtaining a set of gesture units that improves the generalization capability of standard gesture recognition approaches. In our context, gesture primitives are evolved over time using dynamic programming and generative models in order to recognize complex actions.
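The entry does not detail the dynamic-programming step. A widely used dynamic-programming tool in gesture recognition is dynamic time warping (DTW), which aligns two sequences of different lengths frame by frame. The Python sketch below is a generic DTW nearest-template classifier under stated assumptions, not the project's evolutionary method: each frame is assumed to be a list of numeric features, and the `templates` dictionary of labelled subgesture sequences is hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Frame = Sequence[float]  # assumption: one feature vector per video frame


def dtw_distance(a: Sequence[Frame], b: Sequence[Frame]) -> float:
    """Classic DTW: minimal cumulative Euclidean cost of aligning a with b."""
    n, m = len(a), len(b)
    # cost[i][j] holds the best alignment cost of a[:i] against b[:j].
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])  # local frame-to-frame distance
            # Extend the cheapest of: insertion, deletion, or diagonal match.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def classify(sample: Sequence[Frame],
             templates: Dict[str, List[Sequence[Frame]]]) -> Tuple[str, float]:
    """Return the label of the template closest to sample under DTW.

    templates is a hypothetical mapping from gesture label to example sequences.
    """
    best_label, best_dist = "", math.inf
    for label, sequences in templates.items():
        for template in sequences:
            d = dtw_distance(sample, template)
            if d < best_dist:
                best_label, best_dist = label, d
    return best_label, best_dist


if __name__ == "__main__":
    # Toy one-dimensional gestures, purely illustrative.
    templates = {"wave": [[[0.0], [1.0], [2.0]]], "push": [[[5.0], [5.0], [5.0]]]}
    print(classify([[0.0], [1.0], [1.0], [2.0]], templates))  # ('wave', 0.0)
```

Because DTW tolerates differences in speed, the four-frame sample matches the three-frame "wave" template at zero cost; an evolutionary scheme like the one described above would act on which subsequences are kept as templates, which this sketch does not model.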

GitHub Project

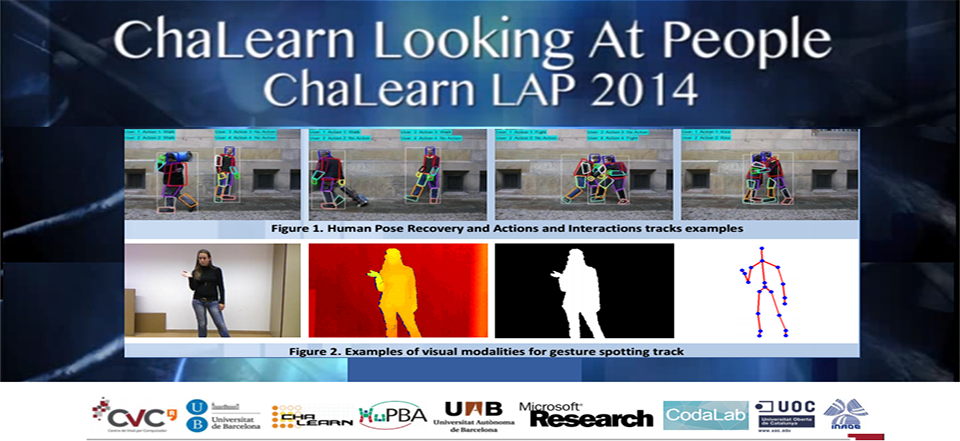

ChaLearn Looking at People Gesture Challenge

Organization of the ChaLearn Looking at People challenges, held in conjunction with the ECCV, CVPR, and ICPR conferences.

Challenges on human pose recovery, action and interaction recognition in RGB data, gesture spotting in RGB-Depth data, and first impressions from apparent personality traits. More information is available at gesture.chalearn.org.

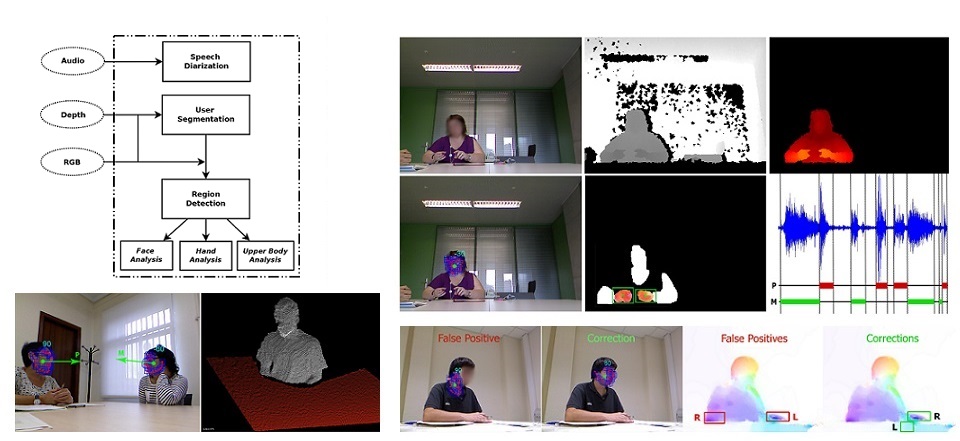

Behavior Analysis in Victim-Offender Mediations

Non-invasive ambient intelligence framework for the semi-automatic analysis of non-verbal communication, applied to the field of restorative justice. The system uses computer vision and social signal processing technologies in real Victim-Offender Mediation scenarios, applying feature extraction techniques to multi-modal audio, RGB and depth data. A set of behavioral indicators that define communicative cues, drawn from the fields of psychology and observational methodology, is computed automatically. The system was tested on data captured with the Kinect™ device in real-world Victim-Offender Mediation sessions in the region of Catalonia, in collaboration with the Department of Justice of the Generalitat de Catalunya.

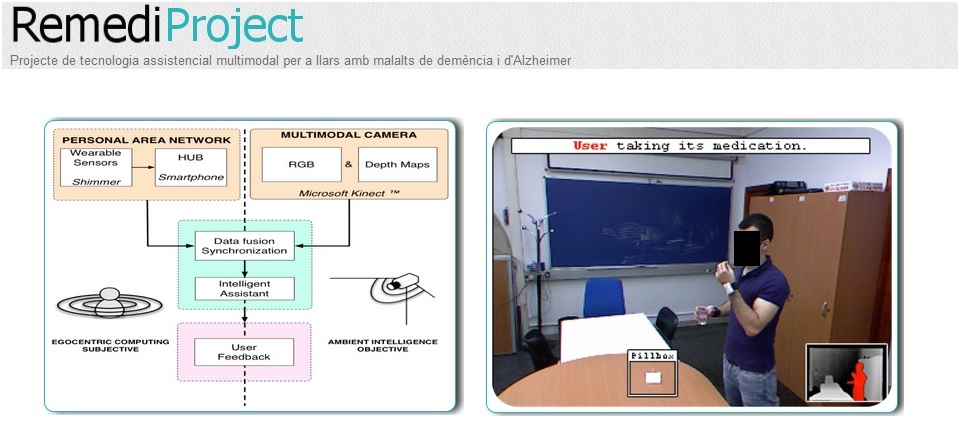

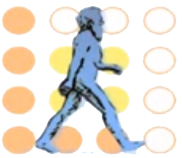

ReMeDi: Multi-modal Assistance Technology for the Elderly

ReMeDi is a project in the field of multi-modal home assistive technology for dementia and Alzheimer's disease, developed at the University of Barcelona and funded by the RecerCaixa program for supporting scientific excellence. The goal of the project is to build a fully functional, generic proof of concept that uses ubiquitous and egocentric computing to help memory-impaired people, focusing on the task of taking medication. This will be achieved by advancing research in human detection, pose recognition and action/gesture analysis using multi-sensor data fusion.

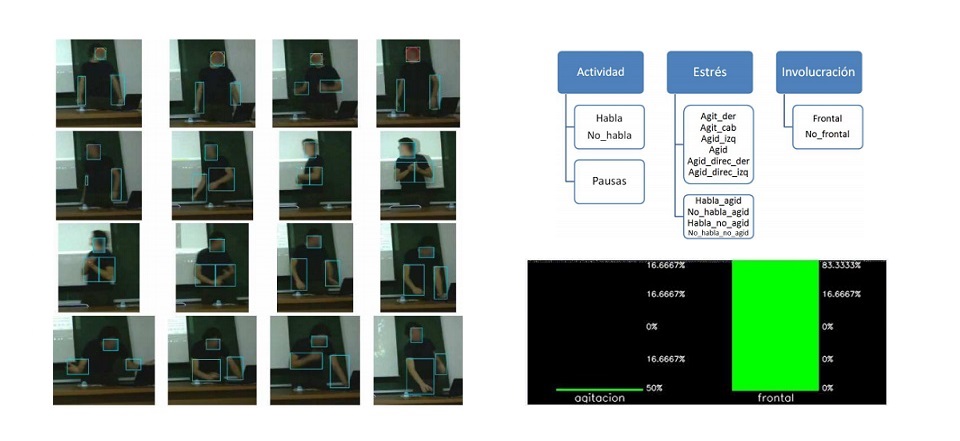

Behavior Analysis in Oral Expression

Software tool that extracts high-level features from people through the analysis of audiovisual data. The information obtained from both oral and non-verbal communication is of particular interest for analysing the social cues that people display during oral expression. These cues can be analysed to improve the quality of communication in settings such as presentations or job interviews. The system has been applied to 15 students' final bachelor's degree presentations to discriminate between the best and the worst presentations, and the classification results were correlated with the evaluation committee's assessment. The system has two versions: one that analyses a recorded video and another that performs the analysis in real time using data captured from a webcam.
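The entry does not say which measure was used to correlate the system's output with the committee's evaluation. One plausible choice for comparing two orderings of presentations is Spearman's rank correlation, sketched below in Python as an assumption. The function names are hypothetical, and the values in the usage example are toy numbers, not data from the study.

```python
import math
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Return 1-based ranks, giving tied values the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (sx * sy)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: the Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


if __name__ == "__main__":
    # Toy values: hypothetical system scores vs. hypothetical committee grades.
    system_scores = [0.9, 0.4, 0.7]
    committee_grades = [9, 5, 8]
    print(round(spearman(system_scores, committee_grades), 3))  # 1.0
```

Rank correlation only asks whether the system orders the presentations the way the committee does, which suits a best-versus-worst discrimination task better than comparing raw score magnitudes.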